Using Machine Learning for Structural Identification¶

This notebook provides a demonstration of how a simple set of descriptors computed by freud can be coupled with machine learning for structural identification. The set of descriptors used here are not enough to identify complex crystal structures, but this notebook provides an introduction. For a more powerful set of descriptors, see the paper Machine learning for crystal identification and discovery (Spellings 2018) and the library pythia, both of which use freud for their computations.

[1]:

import warnings

import freud

import matplotlib.cm

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

warnings.filterwarnings("ignore")

We generate sample body-centered cubic, face-centered cubic, and simple cubic structures. Each structure has at least 4000 particles.

[2]:

N = 4000

noise = 0.04

structures = {}

n = round((N / 2) ** (1 / 3))

structures["bcc"] = freud.data.UnitCell.bcc().generate_system(n, sigma_noise=noise)

n = round((N / 4) ** (1 / 3))

structures["fcc"] = freud.data.UnitCell.fcc().generate_system(n, sigma_noise=noise)

n = round((N / 1) ** (1 / 3))

structures["sc"] = freud.data.UnitCell.sc().generate_system(n, sigma_noise=noise)

for name, (box, positions) in structures.items():

print(name, "has", len(positions), "particles.")

bcc has 4394 particles.

fcc has 4000 particles.

sc has 4096 particles.

Next, we compute the Steinhardt order parameters \(q_l\) for \(l \in \{4, 6, 8, 10, 12\}\).

We use the Voronoi neighbor list, removing neighbors whose Voronoi facets are small.

[3]:

def get_features(box, positions, structure):

voro = freud.locality.Voronoi()

voro.compute(system=(box, positions))

nlist = voro.nlist.copy()

nlist.filter(nlist.weights > 0.1)

features = {}

for l in [4, 6, 8, 10, 12]:

ql = freud.order.Steinhardt(l=l, weighted=True)

ql.compute(system=(box, positions), neighbors=nlist)

features[f"q{l}"] = ql.particle_order

return features

[4]:

structure_features = {}

for name, (box, positions) in structures.items():

structure_features[name] = get_features(box, positions, name)

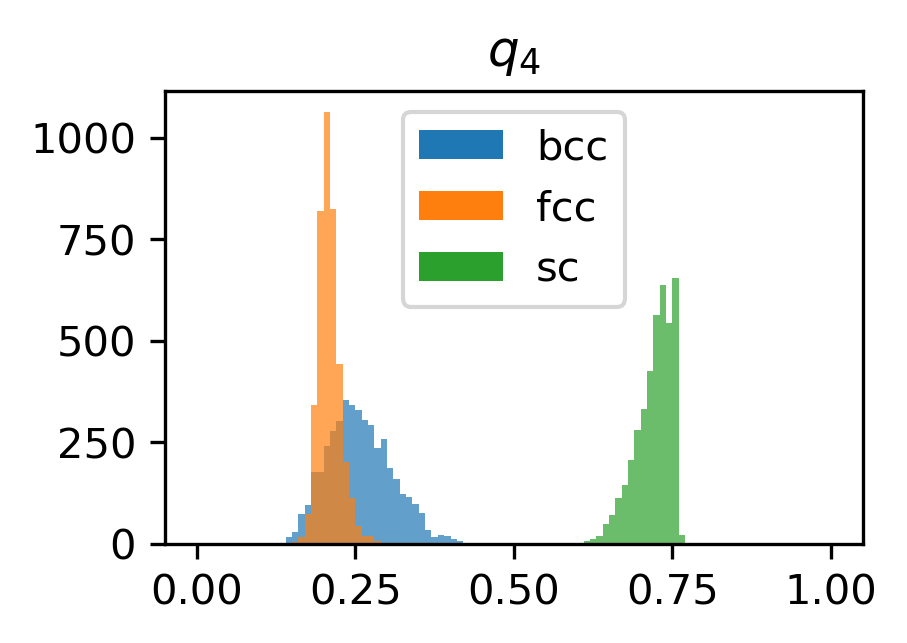

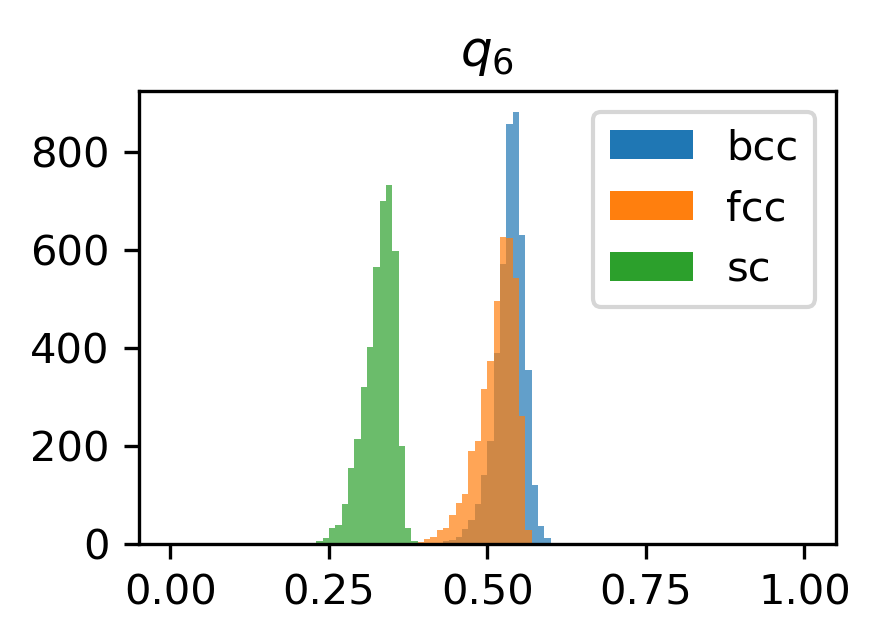

Here, we plot a histogram of the \(q_4\) and \(q_6\) values for each structure.

[5]:

for l in [4, 6]:

plt.figure(figsize=(3, 2), dpi=300)

for name in structures.keys():

plt.hist(

structure_features[name][f"q{l}"],

range=(0, 1),

bins=100,

label=name,

alpha=0.7,

)

plt.title(rf"$q_{{{l}}}$")

plt.legend()

for lh in plt.legend().legendHandles:

lh.set_alpha(1)

plt.show()

Next, we will train a Support Vector Machine to predict particles’ structures based on these Steinhardt \(Q_l\) descriptors. We build pandas data frames to hold the structure features, encoding the structure as an integer. We use train_test_split to train on part of the data and test the model on a separate part of the data.

[6]:

structure_dfs = {}

for i, structure in enumerate(structure_features):

df = pd.DataFrame.from_dict(structure_features[structure])

df["class"] = i

structure_dfs[structure] = df

[7]:

df = pd.concat(structure_dfs.values()).reset_index(drop=True)

[8]:

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import normalize

from sklearn.svm import SVC

[9]:

X = df.drop("class", axis=1).values

X = normalize(X)

y = df["class"].values

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.33, random_state=42

)

svm = SVC()

svm.fit(X_train, y_train)

print("Score:", svm.score(X_test, y_test))

Score: 0.9868995633187773

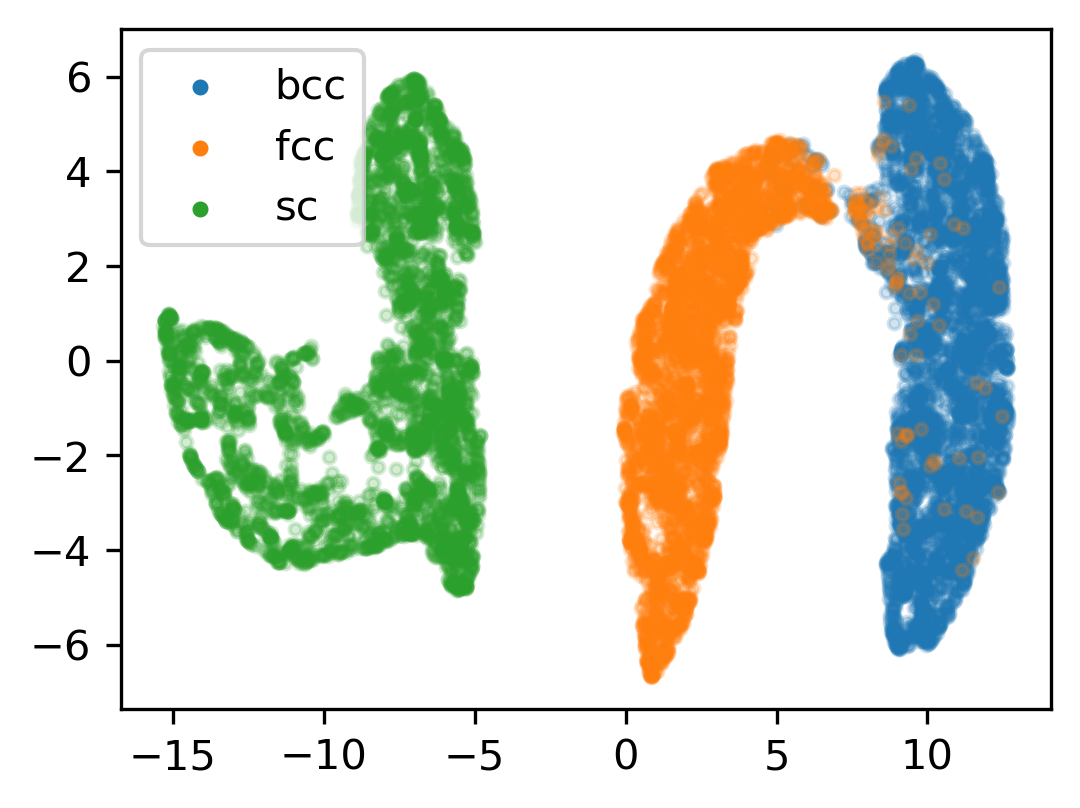

Finally, we use the Uniform Manifold Approximation and Projection method (McInnes 2018, GitHub repo) to project the high-dimensional descriptors into a two-dimensional plot. Notice that some bcc particles overlap with fcc particles. This can be expected from the noise that was added to the structures. The particles that were incorrectly classified by the SVM above are probably located in this overlapping region.

[10]:

from umap import UMAP

umap = UMAP(random_state=42)

X_reduced = umap.fit_transform(X)

[11]:

plt.figure(figsize=(4, 3), dpi=300)

for i in range(max(y) + 1):

indices = np.where(y == i)[0]

plt.scatter(

X_reduced[indices, 0],

X_reduced[indices, 1],

color=matplotlib.cm.tab10(i),

s=8,

alpha=0.2,

label=list(structure_features.keys())[i],

)

plt.legend()

for lh in plt.legend().legendHandles:

lh.set_alpha(1)

plt.show()